Definitions:

- Vulnerability testing: identifying security flaws (all of them, it is hoped).

- Penetration testing: identifying and attacking vulnerabilities (maybe the worst ones, maybe just a sample, maybe all of them).

- Bug-bounty hunting: finding some vulnerabilities (probably not systematically or all of them) and exploiting each for separate reward.

Becoming a Bug-Bounty Hunter

Ceos3c's "The different Phases of a Penetration Test"

Katerina Borodina's "How to Learn Penetration Testing: A Beginners Tutorial"

hmaverickadams / TCM-Security-Sample-Pentest-Report

PTES's "Penetration Testing Execution Standard"

OccupyTheWeb's "Become a Hacker"

Sebastian Vargas's "Different Angles of Cybersecurity"

BehiSecc / First-Bounty

djadmin / awesome-bug-bounty / Getting Started (huge; don't get lost in here)

Guru99's "Free Ethical Hacking Tutorials: Course for Beginners" (a little stale, but worthwhile)

The Cyber Mentor's "So You Want to Be a Hacker"

The usual model is "pay for results": hacker gets paid if they find a problem. Bugcrowd also is rolling out "pay for effort": person gets paid like a contractor/consultant for running tests with a defined coverage and skill level.

Is 'pay for results' a fair deal ? Can a bounty-hunter make a living ?

Depends on the competence and intentions of the bounty-hunter (and the following is written from a US perspective):

- Hobbyist or side-hustle:

Bug-bounty hunting is a great way to learn all kinds of fascinating stuff. Money would be nice, but maybe secondary. The challenges, and tiny bits of bragging rights, add to it.

About "I haven't found anything, is it worth it ?", from people on reddit /r/BugBounty 2/2022:

If you look at the programs you get invited on, there are hundreds of people looking at them at the same time, all doing the same. For example, I was invited to a duplicated XSS bug report for a private VDP (no money) that the customer didn't bother to fix because it had little impact, and in only a couple of months I got messages showing that a dozen more people had reported the same bug and been added to it to show it was a dupe. And, again, that is for a private program that doesn't pay any money, just imagine the amount of competition you get in public programs like Verizon.

...

Life's short. If you aren't enjoying it, stop. Just spend some time catching up on hacking updates and such. If you find yourself missing it, come back.

...

I've been at it for almost a year and the two reports I made were both duplicates. So I can certainly see the "is it worth it" mentality. It's discouraging at times, but the thing I keep seeing everywhere is to give it time. Like others mentioned I have a day job and so that makes it easier. Are you enjoying it as a hobby? if so, great, keep having fun and I'm sure the bounties will come. If you're not enjoying it, take a break. I've found sometimes a break re-energizes me

- InfoSec beginner:

Bug-bounty hunting is a way to get started in an IT career, when you have no experience and no one will hire you. If you can add "I know how to use Burp Suite and nmap" and "know HTML and JavaScript" and "found web app bugs of types A and B" to your resume, that's a foot in the door. Some money would be good, and the learning is key.

It also would help if you did some open-source programming projects, had a GitHub presence, had a personal web site, and a LinkedIn profile. Establish a professional appearance and writing style; you're not trying to look and talk like some tough-guy teenager doing this for the lulz.

If eventually you want to get a job at a specific big company, maybe focus on testing their apps as a bug-bounty hunter. When you go interview for a job there, you'll be able to say "I already know your apps inside and out".

harisqazi1's "Entry-Level Cybersecurity Job Resource"

Adam Ruddermann's "How To Use Bug Bounty To Start A Career In Silicon Valley" (video)

Getting certificates ? Paul Jerimy's "Security Certification Roadmap"

- Mid-performing bounty-hunter:

Maybe you've become pretty good at networks or web apps or something. At this point, companies might be underpaying for your bug-bounty work. You might work fairly hard to make $1000/week, when someone with your skills could make 2x that (plus benefits) in a US corporate job running networks or firewalls or something. The corporate work might not be as exciting, but you have to decide if you want to stick with bounty-hunting or change to a salaried career.

From discussion on reddit /r/AskNetsec 3/2019:

> Recently, I've been participating in bug bounty programs

> full-time and have been pondering a more legitimate/stable career

> in security as a result. I've reported 18 valid vulnerabilities

> in the past two and a half months, and have made a little less

> than $10,000 (I'm seriously not trying to brag or anything,

> I just want to paint an accurate picture of my situation).

> During this time one of those bugs was actually a vulnerability

> in an F5 Networks product which I also responsibly disclosed

> to them and got a CVE for. Hunting for bugs is basically my

> full-time job right now.

>

> The big caveat I think, though, is that I don't have a degree/certs.

> I've never taken classes for anything related to computers. I also

> have never worked professionally in any infosec/programming field

> (I've worked as a fry-cook for my only real job). I do know how to

> program, though, as I have released 6 or 7 iOS apps through my own

> company. But, they're all pretty simple, and not very impressive.

>

> My question is, can I use this experience to start a career in security?

> If so, how? I would really like to get out of doing bug bounties sooner

> rather than later, as they are simply not a reliable source of income.

> Even though I've had some great successes, this month I could find

> nothing or just get a bunch of duplicates, and make $0. However, I feel

> not having a formal education and no certs will really hamper my

> chances of getting employed anywhere. Is this true? Have any of

> you been someone who only had bug bounty experience and got a job

> in the field? I'm willing to learn anything that is necessary to

> get there, because I finally feel like I've found the field I want

> to be in for the rest

> of my career.

IMO, this definitely counts as experience! Unfortunately, I would not say this is a magic bullet since it's very common for employers to want some type of degree. Depending on the organization, this might be a deal breaker. But I do know plenty of people that have badass roles without any degree.

I would still encourage you to apply for jobs that look appsec-related or interesting to you, though. Don't stress too hard about not meeting all the requirements listed on a listing.

...

I can't really speak for other employers, but I look at experience first. Last guy I hired doesn't have a degree.

...

I personally LOVE seeing bounty experience (with successful payouts), as to me it indicates a variety of things, such as:

- You're willing to put your own time into doing the work

- You are finding bugs worth money to someone

- You have experience looking at real applications

...

Why don't you spend a few months getting your Network+ and Sec+? You obviously have the aptitude and drive for demanding technical work. Certs like those are not absolutely necessary but it sends a signal to potential employers that you know some basics.

I'd say those two certs plus the bug bounties would definitely be enough for some SOC director or Software Development manager to give you a shot. Once you get some actual experience, those bug bounties would serve as a big differentiator between you and people that just had the certs or some college classes.

...

I'm a hiring manager and have moved into a position where the team is small and lean and we perform a myriad of tasks from technical to business development.

I would prefer a candidate with a degree as it tells me they were forced to write at one point, they've had to take classes they were not exactly thrilled with in their degree plan, and more importantly they stayed the course for a set amount of time.

In lieu of a degree I would prefer an overabundance of experience and proven performance along with the ability to speak intelligently to peers, clients and within other business units. There is no time for the brilliant a**hole, the spotlight ranger that lives on their own island, or the socially inept that clams up whenever they have to brief.

Since you are new, gain experience any way you can. Learn adversarial tradecraft to round out your skillset. Spend time building your callback infrastructure and test it. As you gain more experience and can move into roles, get your degree in something so you can bypass HR filters and do what you really want.

Sohaib Adil's "A Day in the Life of a Cybersecurity Professional"

- High-performing bounty-hunter:

Again, companies might be underpaying for your bug-bounty work. You might work fairly hard to make $2000/week, when someone with your skills could make 2x that (plus benefits) in a US corporate job managing a security team or running a SOC or something. Maybe you could make 3x that (without benefits) as a top consultant, brought in for specific jobs or in emergencies.

Trail of Bits' "On Bounties and Boffins"

Jon Martindale's "Meet the bug bounty hunters making cash by finding flaws before bad guys"

Shaun Waterman's "The bug bounty market has some flaws of its own"

Sean (zseano)'s "Are you submitting bugs for free when others are being paid? Welcome to BugBounties!"

Shaun Nichols' "I won't bother hunting and reporting more Sony zero-days, because all I'd get is a lousy t-shirt"

Gwendal Le Coguic's "Cons of Bug Bounty"

Wladimir Palant's "If your bug bounty program is private, why do you have it?"

Sylar's "When Bug-Bounty becomes cheap/free Pentest(s)"

"5 times in a row with different companies claimed the vuln was already found but not fixed yet so no reward."

"Over 300,000 hackers have signed up on HackerOne; about 1 in 10 have found something to report; of those who have filed a report, a little over a quarter have received a bounty"

from Matt Asay's "Bug bounty programs: Everything you thought you knew is wrong"
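
Working through the numbers in that quote, as a rough sketch: I'm treating "over 300,000" as exactly 300,000 and "a little over a quarter" as exactly 25%. Both are my own rounding assumptions, so the results are approximate lower bounds, not figures from the article.

```python
# Rough arithmetic on the HackerOne figures quoted above.
# Assumptions: "over 300,000" is taken as 300,000 and "a little over a
# quarter" as 25%, so these are approximate lower bounds.
signed_up = 300_000
reporters = signed_up // 10      # about 1 in 10 have filed a report
paid = reporters * 25 // 100     # about a quarter of reporters got a bounty

paid_share = paid / signed_up    # fraction of all sign-ups ever paid

print(f"filed a report:         {reporters}")
print(f"received a bounty:      {paid}")
print(f"share of sign-ups paid: {paid_share:.1%}")
```

So under those assumptions, only a few percent of the people who sign up ever receive any bounty at all.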

Some good news for bug bounty-hunters:

- More and more companies are rolling out apps.

- There are lots of legacy apps out there.

- Apps are becoming more complex and vital.

- Huge amounts of code are written every day, in proprietary apps and open-source modules and advertising scripts etc.

- More and more types of devices are becoming internet-enabled.

- New devices and features are introduced every year.

- Companies are having trouble hiring experienced people, and keeping up with threats and vulns and patching and new development and QA and maintenance.

- The penalties (in reputation, regulation, and money) for data breaches are increasing.

Learn

- Basics of: operating systems, networking, programming, scripting, databases, crypto, hardware, app types, app structures, servers.

- Technologies: specific operating systems, programming languages, web app structures, databases.

- Tools: monitoring/analysis tools, penetration tools, scripts.

- Techniques/strategies: scanning, fuzzing, common attacks.

- Legalities: don't try out these tools and techniques on some network or server you don't have permission to attack.

You can't learn or know everything, so you'll have to focus on some areas and mostly ignore others, for now.

LiveOverflow's "The Secret step-by-step Guide to learn Hacking" (video)

OccupyTheWeb's "The Essential Skills to Becoming a Master Hacker"

Vickie Li's "Mastering the Skills of Bug Bounty"

About pentesting as a career-path, from /u/unvivid on reddit 12/2018:

Learn IT Operations/Engineering. Pen testing is about using operations/dev tools in creative ways, about abusing trust relationships. Most people I know that are good/great have background in IT ops and know how to maneuver in those environments. They understand the challenges that operations has and don't beat on them. I'm not saying that people fresh out of school can't become great pentesters, they definitely can -- I know several. But shore up your lack of operational knowledge by building/testing/developing/engineering/Architecting. Don't focus solely on security. Deep dive IT Operations, know how to troubleshoot, know how to sysadmin and engineer networks. Know why things are built wrong/right -- understand what social/political/financial pressures drive companies. Build social skills, learn to communicate and think objectively. That's what gets people into cool jobs. ...

...

... Learn the Windows side, in passing at least. At the highest levels nearly everyone I know is OS agnostic. Yes we all love to sh*t talk MS, but nearly every environment I pentest is 90% Windows. Cloud has changed that somewhat and the winds are shifting. But knowledge of both stacks is good to have. I started my career as a Windows Sysadmin and I'd say that experience was a huge part of what got me into my first security gig. Tons of people love to sh*t on Windows, but it pays the bills and the days of the admin that couldn't script or just clicked through things is coming to an end.

My thoughts:

- You have to learn how things work, before you can break them.

- Press forward on all fronts simultaneously: learn some OS stuff, some programming language stuff, some tool stuff, read some hacking tutorials or books, do some challenge stuff. When you get bored with one thing, switch to another for a little while.

- There is no secret shortcut, and everyone's paths and strengths are different.

- Enjoy the learning and experimenting. Even if you never do bounty-hunting and make money, the new knowledge and skills can pay off for you.

- Even "failures" are useful. Just can't understand crypto, or assembly code ? Well, focus on other areas that you DO understand.

From Avi Iyer's "Bug Bounty - Finding The First Bug":

"In reality, this is not an entry level field."

What are your strengths ?

- Familiarity with product X or company Y or industry Z or govt agency G or regulation R ?

- Familiarity with technology T ?

- Familiarity with programming in languages A and B ?

- Fluency in human language L ?

- Located in country C ?

- Social engineering ?

- Familiarity with tools P and Q ?

- Assets such as multiple machines or a lab or lots of GPUs ?

- Target you already own, such as a particular router or webcam or game console ?

- People you can team up with ?

Understand the legalities and rules of engagement

- Do you have to register ahead of time with your target ?

- Have to sign an NDA ?

- Get exact company and site information. There are lots of companies and apps with similar names, you don't want to attack the wrong one.

- Secure ways of contacting company reps ? Encrypted email ? Passcodes when phoning in ?

- Emergency contact if you accidentally crash something ?

- Would you be paid for penetration (exploit) or for coverage (testing) ?

- Does the target set boundaries (scope) for the testing ?

- Does the scope include third-party services and servers ?

- Does the scope include back-office/internal apps and servers ?

- Are you allowed to put an exploit on a user's computer ?

- Does the scope exclude various known vulnerabilities from the testing ? What is the list ? Will you be notified if the list changes ?

- Does the scope exclude particular pages/functions from the testing ? Messing with their account sign-up, password-reset, or "Contact Us" pages may flood their Support people with tickets or emails, for example.

- Does the scope exclude various tools/scanners from the testing, because they generate too much load on the network/servers ?

- Is there a time limit ?

- Does the target define what they would like you to do to prove a vulnerability ? They probably don't want you to dump the whole database or delete a user's account, for example.

- Would you be paid for finding some major functionality bug, or some major violation of a computer standard or legal regulation the app claims to comply with ?

- Will you have access to manuals, a normal user account, training materials, license keys ?

- Can you get a copy of the application to install locally, on your machine(s) ? Or at least get a demo or trial version ?

- If there is some device involved, can you get a loaner ?

- If this is a retail application, will you have a way of creating and completing and returning fake orders, or paying with funny-money ?

- Will you have access to source code and network topology documents (white-box testing) or not (black-box testing) ?

- Will you be told what kinds of internal testing have already been done ?

- Will the target be a testing-only system, or a production system with real users and data ?

- Is your testing supposed to remain undetected ?

- Can the target whitelist your IP address, so you don't get blacklisted (for more than just this target) after doing suspicious traffic ?

- Don't do anything that will violate TOS with your ISP or VPN or other services.

- Don't do anything that will cause a safety hazard. Does the target control door-locks, or industrial or medical equipment, or emergency-response information ?

- Don't do anything illegal.

- Avoid conflicts of interest, such as trading the stock of a company you are testing.

- Avoid conflicts of interest with your day job; don't use day-job equipment or tools or time to do your hunting, don't hunt on competing companies, etc.

- Know what to do if you suddenly see personal info (PII) for some user, or something that violates HIPAA, DFARS, FERPA, GDPR, etc.

- Know what to do if you see a way to crash a system or scramble data or deny service to all users.

- Know what to do if you see a way to steal money.

- Know what to do if you find something illegal on the target system.

- What happens if you exploit something (dump database into a file, maybe) and then someone unknown steals the data (grabs the file) ?

- You may never be allowed to disclose information you determine about the target's network or servers or apps or data, even if you find no vulnerabilities.

- How to disclose ethically ?

- How to disclose effectively ?

Interesting list of what is not a bug, in Facebook: Facebook's "Commonly submitted false positives"

Vickie Li's "Out of Scope"

Strategies for choosing a target

- Pick a target that has money.

- Pick a target that has a bug-bounty program.

- Pick a target that has a bug-bounty program that pays money.

If it says "Type: Responsible Disclosure" or "Vulnerability Disclosure Program" (VDP), it doesn't pay money.

But starting in a "kudos-only" program can help you build a track record and tools, and maybe get invited to a private for-money program.

- Pick a target for which security and correctness are important.

- Pick a target that matches your strengths.

- Pick a target that you find interesting.

- Pick a target that isn't well-tested by other bug-bounty hunters.

(There is competition; Bugcrowd has some 100K accounts, HackerOne has 300K.)

- Pick a target with lots of configurations or plug-in options; combinations may be lightly tested.

- Pick edge-cases or unusual configurations or sub-domains that may have less test coverage than the mainstream, flagship product.

- Pick a target that releases new software frequently; they may not be testing well. Or:

- Pick a target that hasn't released a new version in quite a while; they may not be patching known problems in dependencies.

- Pick a target that tends to write lots of custom code, not just use standard products and libraries.

- Pick a target that went through a big transition, such as a big merger (look for mergers and acquisitions on wikipedia) or big layoffs.

To me, the "money" and "security and correctness are important" items generally spell "corporate or govt apps" these days. There aren't going to be big bounties for finding some text-formatting bug in MS Office, or a play-flaw in some game, or bugs in open-source software such as Linux or Node packages. (Except see Julia Reda's "In January, the EU starts running Bug Bounties on Free and Open Source Software".)

The trick probably is to find a target small enough that it hasn't been picked over by a lot of hunters, but big enough to have a bounty program.

Dmitriy's "Economics of the bug bounty hunting"

Success stories including some strategy:

Maycon Vitali's "Talk is cheap. Show me the money!"

"Want an easy way to find new bug bounties? Search for the term 'bug bounty' on Indeed or LinkedIn Jobs. You will see public AND private bounty programs."

from tweet by Paul Seekamp.

- Look at the site for some app you already know, to see if they have a bug-bounty program.

- Look in Bug-Bounty Programs

- Smaller bounty programs or smaller apps may have less competition.

- Extremely large companies (Google, Facebook, Amazon) can be worlds unto themselves, using libraries and even languages they developed themselves, or using standard patterns across all their apps. Invest some time in understanding their terminology and patterns. But expect lots of competition, and expect that the internal security team has caught the most obvious problems.

Google:

Daniel Stelter-Gliese's "Best Of Google VRP 2018" (video)

Google Bughunter University

- Look for recent bounty writeups or disclosures for the target.

Get a sense of how many people are working on the target and what kinds of problems they've found already.

Figure out resources for the given target

- Software and version numbers.

- Source code.

- OSINT about their network and software. Articles, manuals, Wiki / KnowledgeBase / TechSupport, Google, Shodan, YouTube, Facebook page, etc.

- Information in previous bug-reports.

- Dependencies / supply-chain.

- Monitor the target over time, looking for new releases.

Luke Rixson's "Hacking how-to's: Developing your process"

Barrow's "How to Organize Your Tools by Pentest Stages"

Occupy4eles's "Use Magic Tree to Organize Your Projects"

OccupyTheWeb's "The Hacker Methodology"

More about this: Reconnaissance

How to report effectively

- Re-read the allowed scope and known (excluded) vulnerabilities, to make sure you're okay.

- Try to find the biggest scope for the bug. Multiple browsers, multiple OS's, desktop and mobile, multiple versions, multiple countries, multiple users, etc.

- Document clearly, with exact URLs and with pictures and video.

- Explain exactly how to reproduce, with exact URLs etc, for technical audience (developers, QA).

- Explain the severity and effects, for a non-technical audience.

SheHacksPurple's "Security bugs are fundamentally different than quality bugs"

After reporting

Your bug will have to be:

- triaged,

- validated,

- evaluated,

- fixed,

- fix tested,

- fix deployed,

- then maybe publicized.

What to expect from bug-bounty hunting

- A long learning period, drowning in information and not knowing what you're doing.

- Starting out on programs that pay no money at all, just kudos or credit or swag. (Often called a "vulnerability disclosure program".)

- Long periods of time with no hits. This could be you failing, if other people are finding bugs in the same apps. Or it could just be that the apps have no security bugs.

- Lots of competition, especially for the easy/beginner bugs.

- Companies that don't agree that your bug is important.

- Companies that don't pay as much as you think they should.

- This will be a side-job/hobby, not your full-time job, unless you're a wizard.

kongwenbin's "A Review of my Bug Hunting Journey"

Marcin Szydlowski's "Inter-application vulnerabilities and HTTP header issues. My summary of 2018 in Bug Bounty programs."

cinzinga's "Two Years of Bug Bounty Hunting"

Trail of Bits' "On Bounties and Boffins"

Erin Winick's "Life as a bug bounty hunter: a struggle every day, just to get paid"

Daniel Kelley's "The Reality Of Full-Time Bug Bounty Hunting"

phwd's "Respect yourself"

Caleb Kinney's "How to Fail at Bug Bounty Hunting" (video)

Frans Rosen's "Eliminating False Assumptions in Bug Bounties" (video)

InsiderPhD's "Finding Your First Bug" series (video)

InsiderPhD's "Low Competition Bug Hunting (What to Learn)" (video)

Shubham Shah's "So, you want to get into bug bounties?"

Sam Curry's "Don't Force Yourself to Become a Bug Bounty Hunter"

Manasjha's "Pushing yourself through hard hunting days"

radekk's "5 mistakes to avoid on the bug bounty program"

HakLuke's "10 tips for crushing bug bounties"

More about competition:

I see several Facebook groups full of guys in India, Pakistan, Bangladesh who are running tools and pushing buttons and trying to figure out what they're doing. Probably there are a lot more on Twitter and other places, and from China and Nigeria and Philippines etc.

They have access to the same internet and same tools that you do. If all you learn how to do is run scans and catch very simple bugs, you will be competing with those guys.

Companies are moving to application frameworks instead of lots of custom code. Those frameworks probably do the basics (authentication, input sanitizing, URL-checking, permissions, database access, etc) fairly safely. If all you learn is how to catch simple bugs, you may be pursuing a dwindling pool of bugs.

Focus

You can't do everything, you have to narrow it down.

It's okay to not know everything.

If your goal is to get a corporate job eventually, focus on areas that will help you get that. Probably not much demand for Wi-Fi cracking or password-cracking skills in corporate jobs.

I'm going to mostly ignore some target areas

- Phones and smartphones.

[But Hacker101 - Mobile Hacking Crash Course (video) says mobile app testing shares a lot of commonality with web app testing.]

- IoT (article), game consoles, routers, etc.

- Apple products.

- Specialized servers: domain controller, mail server, LDAP, DNS, Active Directory.

- Authentication, encryption.

- Creating or reverse-engineering malware.

- Social engineering.

- Physical penetration.

- Wi-Fi, and the physical layer of Ethernet.

- Cloud services.

What I'll focus on

- Web apps.

- Local apps that connect across network to servers.

- Database servers.

My personal situation

- I have BS and MS degrees in Computer Science.

- I was a programmer for more than 20 years, on Unix and Macintosh and PCs, from 1980 to 2001. Retired now.

- I've programmed in C, a little C++, a little assembler code, Pascal, a little JavaScript, HTML, a little CSS, a little SQL. Wrote a Unix driver and did some Unix kernel work long ago, in the early 80's.

- Now running Linux on my daily desktop.

- Did one little open-source project recently: HTML link checker extension for VS Code.

Learning

There are so many sites and tools that you can go crazy trying to know about all of them. Find some that work well for you and get started, don't worry about the rest.

Learn from

- HackerOne:

TigaxMT / HackerOne-Lessons (HackerOne video lessons transcribed to PDFs)

- Hacker101:

- Bugcrowd:

samhouston's "Researcher Resources - How to become a Bug Bounty Hunter"

Bugcrowd University (video)

- OWASP:

OWASP Testing Guide v4

- Hack This Site:

Alex Long's "HackThisSite Walkthrough, Part 1 - Legal Hacker Training"

- Pentester Land:

- HackEDU:

Courses and challenges.

Free use for 1 year after registering.

- PentesterLab:

- Awesome-Hacking:

- djadmin / awesome-bug-bounty

Sanyam Chawla's "Bug Bounty Methodology (TTP - Tactics, Techniques and Procedures) V 2.0"

Huge lists of sites and resources and advice.

- Mitre's "ATT&CK Matrix for Enterprise":

- Writeups by bug-bounty hunters:

Renaud Martinet's "Tips for bug bounty beginners from a real life experience"

HackerOne's "Hacktivity" (recent bug disclosures)

Peter Yaworski's "Web Hacking 101 - How to Make Money Hacking Ethically"

Old but interesting: Wladimir Palant's "Finding security issues in a website (or: How to get paid by Google)"

ngalongc / bug-bounty-reference (list of write-ups by bug type)

Hacking Articles' "Beginner Guide to Understand Cookies and Session Management"

reddit's /r/hacking

reddit's /r/HowToHack

reddit's /r/bugbounty

Some people say: learn from the bottom up, learn the details of protocols and languages and do exploits manually, or else you're just a script-kiddie pushing buttons on tools you don't understand. I say: there's nothing wrong with knowing only some basics and using existing tools. Then iterate up and down, figure out what's really happening when you execute some script, learn more about the language the web app is using, learn more about features of the tool you're using. Push forward your learning on all levels, bit by bit.

Common Terms/Techniques

- Vulnerability: a security bug or entry point.

- Exploit: use a vulnerability to compromise the system to the greatest extent possible.

- Payload: a thing you put onto the target machine as part of an exploit. Could be an executable file, a DLL, an image in memory of some process, a link or script in a page, a script in a database record, more.

- Proof of Concept (PoC): explain and show a vulnerability and how it can be exploited to accomplish some bad result.

- Intercepting proxy: when attacking a web site or web application using a big GUI app such as Burp Suite or OWASP ZAP, you put an intercepting proxy between your browser and the network. The proxy will record all requests and responses, let you modify or repeat them, and provide a vehicle for the scanners and attackers to go out to the network.

- Bind shell: get the target machine to start listening on some port, and provide a shell to anyone who connects on that port.

- Reverse shell: firewalls, routers and IPSs may be set up to prevent incoming traffic to the target machine on unexpected or dangerous ports. So, instead, have the target machine do an outbound connection to your (attacking, listening) machine, and then provide a shell over that connection.

Bug-Bounty Programs (and other things to join)

HackerOne

Bugcrowd

Intigriti

Portswigger (for their "Web Security Academy")

There are lots of other companies, but:

- Some seem region-specific (e.g. India or Asia).

- Some seem industry-specific (e.g. crypto).

- Some emphasize they only want elite hunters.

- Some sound more like contracting shops than open programs.

- Some offer a grand total of only 8 or 10 available targets.

Thuvarakan Nakarajah's "Bug Bounty Guide"

EdOverflow / bugbounty-cheatsheet / bugbountyplatforms.md

Guru99's "Top 30 Bug Bounty Programs in 2019"

Hacks.icu's "Huge list of companies with active bug bounties"

BugBountyNotes' "Public Bug Bounty Program Data" (companies)

nightwatchcyber's "Finding Unlisted Public Bounty and Vulnerability Disclosure Programs with Google Dorks"

Firebounty (part of Yes We Hack)

PlugBounty

In Spanish-speaking countries, search for "Programa de recompensas por deteccion de errores". But I was unable to find any Spain-only bug-bounty programs (I live in Spain).

Subscribe to, or read:

Pentester Land's "The 5 Hacking NewsLetter"

Intigriti's blog

Maybe try a non-monetary (VDP) program:

Om Arora's "How I Found My First 3 Bugs Within An Hour"

Information

- Some key basics to know:

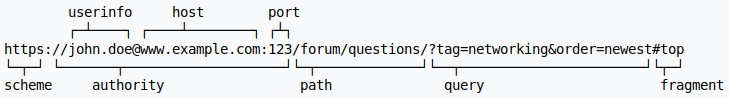

- URL:

Wikipedia's "Uniform Resource Identifier"

Wikipedia's "Data URI scheme"

Wikipedia's "Percent-encoding" (URL encoding)

Interesting: the URL "userinfo" actually can be "username:password", and the password usually won't be logged. Also, the "fragment" usually will stay client-side, not visible to the server.
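To see those URL components concretely, here is a small sketch using Python's standard `urllib.parse`. The URL itself is a made-up example, chosen only so that every component is present.

```python
from urllib.parse import quote, unquote, urlsplit

# Hypothetical URL, used only to show each component.
url = "https://alice:s3cret@example.com:8443/search?q=a%20b#section-2"
parts = urlsplit(url)

print(parts.scheme)    # https
print(parts.username)  # alice   (first half of "userinfo")
print(parts.password)  # s3cret  (second half of "userinfo")
print(parts.hostname)  # example.com
print(parts.port)      # 8443
print(parts.path)      # /search
print(parts.query)     # q=a%20b (still percent-encoded)
print(parts.fragment)  # section-2 (normally never sent to the server)

# Percent-encoding (URL encoding) round trip.
print(unquote("a%20b"))             # a b
print(quote("<script>", safe=""))   # %3Cscript%3E
```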

- HTTP:

Wikipedia's "Hypertext Transfer Protocol"

Jacques Coertze's "Adventures into HTTP2 and HTTP3"

SoByte's "HTTP protocol evolution and features of each version"

Chris Hayner's "Is QUIC too fast for security??"

Wikipedia's "List of HTTP header fields" (request and response)

netsparker's "Whitepaper: Security Cookies"

Cookie flags: Secure means "sent over HTTPS only"; HttpOnly means "no access by client scripting/DOM" (e.g. not readable via document.cookie).
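A minimal sketch of what those two flags look like in a Set-Cookie value, using Python's standard `http.cookies`. The cookie name "session", its value, and both helper functions are illustrative, not taken from any particular site.

```python
from http.cookies import SimpleCookie


def build_session_cookie(value: str) -> str:
    """Return a Set-Cookie value with both flags set (cookie name is illustrative)."""
    cookie = SimpleCookie()
    cookie["session"] = value
    cookie["session"]["secure"] = True    # browser sends it over HTTPS only
    cookie["session"]["httponly"] = True  # not readable via document.cookie
    return cookie["session"].OutputString()


def has_flag(set_cookie_value: str, flag: str) -> bool:
    """Check whether a Set-Cookie value carries the given attribute (case-insensitive)."""
    attributes = [part.strip().lower() for part in set_cookie_value.split(";")[1:]]
    return flag.lower() in attributes


header = build_session_cookie("abc123")
print(header)
print(has_flag(header, "Secure"), has_flag(header, "HttpOnly"))
```

When testing a site, `has_flag` could be pointed at each Set-Cookie value seen in the proxy history to spot session cookies that lack either flag.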

Site-level policies:

Wikipedia's "Content Security Policy"

Wikipedia's "Subresource Integrity"

Wikipedia's "HTTP Strict Transport Security" (HSTS)

MDN's "Same-origin policy"

Same-origin policy (SOP) is a key security mechanism: it prevents code loaded from one origin (scheme, host and port) from making AJAX reads from, or accessing the DOM of, a different origin. But SOP can be relaxed or circumvented by changing document.domain, messaging among windows or between windows and a browser extension, or using CORS.

From Chapter 13 "Attacking Users: Other Techniques" in "The Web Application Hacker's Handbook" by Stuttard and Pinto:

> The same-origin policy does not prohibit one website from issuing requests to a different domain. It does, however, prevent the originating website from processing the responses to cross-domain requests.

Zemnmez's "If CORS is just a header, why don't attackers just ignore it?"

Wikipedia's "Cross-origin resource sharing"

MDN's "Cross-Origin Resource Sharing (CORS)"

Dakota Nelson's "CORS Lite"
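For the same-origin policy discussed above, two URLs share an origin only when scheme, host and port all match. A small sketch of that comparison follows; the function names are made up, and real browsers handle extra cases (such as about:blank and sandboxed frames) that this ignores.

```python
from urllib.parse import urlsplit

# Default ports implied when a URL does not name one.
DEFAULT_PORTS = {"http": 80, "https": 443}


def origin(url: str) -> tuple:
    """Return the (scheme, host, port) triple that defines a URL's origin."""
    parts = urlsplit(url)
    scheme = parts.scheme.lower()
    port = parts.port or DEFAULT_PORTS.get(scheme)
    return (scheme, parts.hostname, port)


def same_origin(url_a: str, url_b: str) -> bool:
    """True when both URLs have identical scheme, host and port."""
    return origin(url_a) == origin(url_b)


print(same_origin("https://example.com/a", "https://example.com:443/b"))  # True
print(same_origin("http://example.com/", "https://example.com/"))         # False
print(same_origin("https://example.com/", "https://api.example.com/"))    # False
```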

New header types are being created fairly frequently (e.g. in 2019, the Sec-Fetch-* request headers, and the Cross-Origin-Opener-Policy response header).

If the web-app site is not using the "X-Frame-Options" header, it may be possible to load a web-app page in an invisible frame inside an attacker's page and trick the user into clicking on it (clickjacking), performing operations with the user's creds.
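A quick way to check a response for anti-framing protection is sketched below. Besides X-Frame-Options, it also looks for the `frame-ancestors` directive of Content-Security-Policy, which is an addition to the note above (it serves the same purpose in newer browsers). The function name is hypothetical, and the headers are passed in as a plain dict, e.g. copied from the proxy.

```python
def framing_protection(headers: dict) -> list:
    """Return the anti-framing settings found in a dict of response headers."""
    lowered = {name.lower(): value for name, value in headers.items()}
    found = []

    xfo = lowered.get("x-frame-options")
    if xfo:
        found.append("X-Frame-Options: " + xfo.strip())

    # CSP is a semicolon-separated list of directives.
    csp = lowered.get("content-security-policy", "")
    for directive in csp.split(";"):
        if directive.strip().lower().startswith("frame-ancestors"):
            found.append("CSP " + directive.strip())

    return found


print(framing_protection({"Content-Type": "text/html"}))
print(framing_protection({"X-Frame-Options": "DENY"}))
```

An empty list suggests the page may be frameable and is worth a manual test in a browser.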

Laconic Wolf's "Briefly Exploring HTTP Header Vulnerabilities"

Sulinski and Reign's "Security Headers: Whys and Hows"

Tim Perry's "HTTPWTF"

- HTML:

Wikipedia's "HTML"

Wikipedia's "List of XML and HTML character entity references"

w3.org's "Character Entity Reference Chart"

- DOM:

Wikipedia's "Document Object Model"

Ian Bicking's "CSS Object Model"

- JavaScript:

Wikipedia's "JavaScript"

Nick Scialli's "12 Concepts That Will Level Up Your JavaScript Skills" (and continued/expanded in nas5w / javascript-tips-and-tidbits)

Wikipedia's "Ajax (programming)"

To present a dialog to the user: alert(something), confirm(something), prompt(str1,str2)

- PHP:

Wikipedia's "PHP"

article with something interesting about PHP filters

- Python:

Strongly (but dynamically) typed, object-oriented, whitespace matters, interpreted.

Wikipedia's "Python (programming language)"

Snakify
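A tiny sketch of what "strongly typed" and "whitespace matters" mean in practice for Python; the function name is just for illustration.

```python
def add_strict(a, b):
    """Return a + b, or a message describing why Python refused."""
    try:
        # Indentation (whitespace) is what delimits this block.
        return a + b
    except TypeError as exc:
        return "TypeError: " + str(exc)


print(add_strict(1, 2))      # ints add normally
print(add_strict("1", 2))    # str + int is rejected rather than silently coerced

value = 1       # names carry no declared type...
value = "one"   # ...so rebinding to another type is allowed
print(type(value).__name__)
```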

"What happens when ..."

ericlaw's "Demystifying Browsers"

The Web Platform: Browser technologies

- OSINT:

Steve Micallef's "OSINT Resources for 2019"

OSINT Framework

should-i-trust Chrome extension

Kody's "Use the Buscador OSINT VM for Conducting Online Investigations"

Kody's "Conduct OSINT Recon on a Target Domain with Raccoon Scanner"

Tokyo Neon's "Quickly Look Up the Valid Subdomains for Any Website" (Sublist3r)

OccupyTheWeb's "How to Conduct Passive Reconnaissance of a Potential Target"

Mr Falkreath's "AmIDoinItRite?" (Google Dorking)

Intrigue-core

jivoi / awesome-osint

randhome's "2019 OSINT Guide"

Shodan:

Hacking Articles' "Shodan a Search Engine for Hackers (Beginner Tutorial)"

Skerritt.blog's "Shodan - The Complete Guide"

Jean Fleury's "Shodan: The Internet of Things Search Engine"

Jean Fleury's "Tango Down: Shodan's Full Potential"

Jean Fleury's "How I Audited An Entire Organization In 15 Minutes"

Shodan often has a special sale on lifetime account for $5 on "Black Friday" each year ?

BinaryEdge:

BinaryEdge

Some say it's better than Shodan.

Google dorks:

Hacking Articles' "Beginner Guide to Google Dorks (Part 1)"

SecurityTrails' "Exploring Google Hacking Techniques"

Alexis' "Google Hacking For Penetration Testing"

Google Hacking Database

clarketm / google-dorks

Crunchbase

Buscador

Open jobs listed on job sites; often they specify the technology the company is using.

- Web Apps:

ZeroSec's "LtR101: Web Application Testing Methodologies"

ZeroSec's "LTR101: WebAppTesting - Methods to the Madness"

ZeroSec's "Web Application Testing - Tooling"

Mr. Nakup3nda's "Successfully Hack a Website in 2016!"

OccupyTheWeb's "How to Hack Web Apps, Part 1 (Getting Started)"

- Vulns / Exploits:

Distortion's "Top 10 Exploit Databases for Finding Vulnerabilities"

OccupyTheWeb's "How to Find Exploits Using the Exploit Database in Kali"

Occupy4eles's "Easily Find an Exploit in Exploit DB and Get It Compiled All from Your Terminal"

OccupyTheWeb's "How to Find Almost Every Known Vulnerability & Exploit Out There" (SecurityFocus)

OccupyTheWeb's "How to Find the Latest Exploits and Vulnerabilities - Directly from Microsoft"

CMU / CERT's "Vulnerability Notes Database"

NIST's "National Vulnerability Database"

Mitre's "CVE List Home"

Flexera's "Vulnerabilities Discovered or Coordinated by Secunia Research"

SecuriTeam

SecurityTracker

Symantec's "Vulnerabilities"

Microsoft's "TechNet"

HackerStorm

McAfee Threat Center

SecurityFocus / Symantec Connect / Bugtraq

Open Bug Bounty

Security Space (compatible with OpenVAS)

Exploit Database

SearchSploit

If you run into unfamiliar tech, maybe get an intro at:

tutorialspoint's "Tutorials Library"

OverAPI

W3Schools

Rosetta Code's "Category:Programming Tasks" (see same app implemented in N different languages)

Challenges

I'm focused on single-person mostly-web-app challenges and labs.

CTF (Capture The Flag) challenges tend to be team-based and often in-person and/or within a specified time-period, and more about cracking encryption or binary files or reverse-engineering etc (although some include web apps), I think. I'm not interested in those. Maybe see Capture The Flag 101.

Each challenge could be:

- On a public web site that you access across the internet.

- A VM image that you install and run on your system.

- An app that you install on top of a web server, in a VM on your system or on a server in your LAN.

For some challenges, mainly XSS, you need an external web site the victim will access, and a way for you to pick up the params they sent to that site. See External web site.

[First few are in order I suggest doing them:]

- Portswigger's "Web Security Academy":

Have to create a free account. Basic web app vulnerabilities. I did most of the SQL ones manually with just browser and Code Beautify to do encoding, but skipped the Blind SQL Injection labs because that seemed too tedious.

Fired up OWASP ZAP and went back to the first SQL injection lab. Did an automatic attack and it found two forms of SQL injection vulnerability.

Tried doing the XSS challenges manually, and got pretty lost. Some of them require using an external server. Skipped those and kept going. You learn more if you don't just paste in the strings supplied in the tutorial; first look at the lab page source to figure out why the string is going to work.

- Hacker101 CTF:

All web-app challenges.

I started doing these as my very first challenges, and quickly felt like a complete idiot, despite all the reading I've been doing. I'm stuck halfway through the 2nd set, "Easy (2 / flag) Micro-CMS v1", and hint after hint is not helping me, I've already tried the things they're hinting about, and some hints are things like "if you can't go in the door, try the window". I'm only using a normal browser; maybe I'm supposed to fire up Burp Suite or something and look at request and response headers ? But I don't think so. Took a look at the 3rd set, got a little traction, but also stuck. Got more hints, fired up Burp Suite and messed with things, no luck, and there are no more hints.

Slowly made progress, skipping challenges where I got stuck, coming back later to some of them, getting hints. It's important to keep notes.

For each challenge, I start manually in the browser, just trying the app and doing "view source" and trying various inputs. Then later I fire up Burp or OWASP ZAP and start modifying and repeating requests.

Later they added a couple of Android challenges. They generate an APK file, which you then have to load into an emulator (see ) or into a real Android phone.

Solutions, Notes and Spoilers:

- A little something to get you started:

- Do "View background image", get flag whose value ends "5793".

- Micro-CMS v1:

- Edit a page, put something like <a onload="alert(1);"> in Body field, Save edit, see page, view source, get flag whose value ends "1ae0".

- Edit a page, put something like <a onload="alert(1);">111</a> in Title field, Save edit, see page, click on Go Home, get alert with flag whose value ends "17ce".

- ...

- Micro-CMS v2:

- In any Username input field, put a single-quote, see SQL error.

- Try to log in, it tells you which is wrong, username or password.

- Database is MariaDB (MariaDB SQL Statements ).

- In login page, put 'or'1=1 in username field, says bad password.

- Hint says what can normal user do in Micro-CMS v1 that they can't do in v2 ?

Edit markdown page via /page/edit/2

Edit test page via /page/edit/1

Create new page via /page/create

But with GET they just give login in v2.

- Encrypted Pastebin:

- Submitted a post to encrypt, got back page showing URL like "/?post=xxxxxxxxxxxxxxxxxxxxxxxxxx", did a get from "/?post=test", and got a huge set of error messages starting with a flag whose value ends "4d98".

- Error message told me they're using AES CBC in UTF-8 with IV of 16 bytes.

Also shows them doing

base64.decodestring(x.replace('~', '=').replace('!', '/').replace('-', '+'))

But trying to decode the URL strings got me nowhere.

- Single-quote is not being escaped when submitted to server.

- Tracking.gif contains nothing interesting, 43 bytes, no EXIF.

- Hint says do XOR.
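The decoding step shown in that error message can be reproduced in current Python roughly as follows. `base64.decodestring` no longer exists (it was removed in Python 3.9), so this sketch uses `base64.b64decode`. The helper names are made up, and treating the first 16 bytes as the IV is an assumption (a common convention), not something the error message confirms. Note that the decoded bytes are still AES ciphertext, which would explain why simply decoding the URL strings gives nothing readable.

```python
import base64

BLOCK_SIZE = 16  # AES block size; the error message mentions a 16-byte IV


def decode_post(value: str) -> bytes:
    """Undo the app's URL-safe substitutions, then base64-decode."""
    standard = value.replace("~", "=").replace("!", "/").replace("-", "+")
    return base64.b64decode(standard)


def encode_post(raw: bytes) -> str:
    """Inverse of decode_post: base64-encode, then apply the substitutions."""
    encoded = base64.b64encode(raw).decode("ascii")
    return encoded.replace("=", "~").replace("/", "!").replace("+", "-")


def split_iv(raw: bytes) -> tuple:
    """Assumption: the IV is the first block and the ciphertext follows it."""
    return raw[:BLOCK_SIZE], raw[BLOCK_SIZE:]


sample = encode_post(bytes(range(48)))   # stand-in for a real "post" value
iv, ciphertext = split_iv(decode_post(sample))
print(len(iv), len(ciphertext))
```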

- Photo Gallery:

- "/fetch?id=1" returns raw data of image, not with proper content type.

- Value of "id" in URL can be a numeric expression (such as 6/3) or hex (0x2) or a string that starts with a number ("1garbage"), but not an expression inside a string where first char is not valid ID value ("3/3"), and not a numeric expression that evaluates to a non-integer ("1.2").

- The two JPEG files don't have flags inside them or in their EXIF data.

- The web server does not allow POSTs.

- Hint says: what would the query for fetch look like ?

- Hint says: app runs on uwsgi-nginx-flask-docker.

I don't know what to do with that info.

Miguel Grinberg's "Flask Mega-Tutorial"

- Cody's First Blog:

- Go to "/?page=admin.auth.inc", get admin login page.

- Go to "/?page=admin.inc", get flag whose value ends with "faa6", and you're admin.

- Go to "/?page=admin", get interesting error messages about file inclusion.

- Go to "/?page=index", get interesting error message.

- SSRF ? Go to "/?page=http://www.google.com/" or "/?page=http://localhost/index". Maybe it does DNS resolution but then doesn't actually fetch anything. I created a PHP file on my personal web site, and it wouldn't fetch it.

- App is using PEAR, so tried some filenames related to that.

- Going to "/?page=home.inc" is valid.

- Going to "/admin.auth.inc.php" is valid, gives you just part of the page you get for "/?page=admin.auth.inc".

- Going to "/home.inc.php" is valid, gives you just part of the page you get for "/?page=home.inc".

- Going to "/index.inc.php" or "/?page=index.inc" fails.

- Going to "/index.php" is same as going to "/".

- As admin, go to "/?page=admin.inc&approve=2" to approve a comment.

- Go to "/admin.inc.php", get interesting error messages. Database is MySQL.

- Post a comment that is PHP (<?php phpinfo(); ?>), get flag whose value ends with "0853".

- ...

- Postbook:

- Logged in as normal user, went to My Profile, changed "id" parameter in URL to "b", now was in admin account. Went to that account's "Dear diary" secret post, got flag whose value ends "7cfd".

- Editing a post, changed "id" parameter to "2", now was editing admin's secret post, saved it, and got flag whose value ends "170a".

- Logged in as user/password, got flag whose value ends "4d61".

- Hint said "189*5", went to "/index.php?page=view.php&id=945" got flag whose value ends "59a2".

- PHP files are: index, profile, create, account, sign_out, view, edit, delete.

- User ids are consecutive integers (1, 2, 3, ...).

- Post ids in edit links are consecutive integers (1, 2, 3, ...); post ids in delete links are 32-char hex strings (turns out they're MD5 hashes).

- Computed MD5 hash of a post id for a different user, went to "/index.php?page=delete.php&id=MD5HASHVALUE", and got flag whose value ends "1cf8".
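Computing the hash for a delete link, as in the last step above, can be sketched like this. It assumes the hash is a plain unsalted MD5 of the decimal post id, which is what the notes suggest; the function name is made up.

```python
import hashlib


def delete_link(post_id: int) -> str:
    """Build the delete URL path for a post id (assumes unsalted MD5 of the decimal id)."""
    digest = hashlib.md5(str(post_id).encode("ascii")).hexdigest()
    return "/index.php?page=delete.php&id=" + digest


print(delete_link(1))
```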

- Ticketastic: Demo Instance:

- Go to "/ticket?id=9", get interesting error message.

- Go to "/ticket", get interesting error message with "cur.execute('SELECT title, body, reply FROM tickets WHERE id=%s' % request.args['id'])" in it.

- Login as admin/admin.
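The `cur.execute(... % request.args['id'])` line in that error message pastes the parameter straight into the SQL text. The sketch below reproduces the pattern against an in-memory SQLite database as a stand-in (the real app's database, table contents and driver are not known here) and contrasts it with a parameterized query.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create a throwaway in-memory table with two made-up tickets."""
    conn = sqlite3.connect(":memory:")
    conn.execute(
        "CREATE TABLE tickets (id INTEGER PRIMARY KEY, title TEXT, body TEXT, reply TEXT)"
    )
    conn.executemany(
        "INSERT INTO tickets VALUES (?, ?, ?, ?)",
        [(1, "first", "hello", ""), (2, "second", "secret", "")],
    )
    return conn


def fetch_unsafe(conn, ticket_id: str) -> list:
    """Same pattern as the error message: the value becomes part of the SQL text."""
    query = "SELECT title, body, reply FROM tickets WHERE id=%s" % ticket_id
    return conn.execute(query).fetchall()


def fetch_safe(conn, ticket_id: str) -> list:
    """Parameterized query: the value is passed as data, never parsed as SQL."""
    return conn.execute(
        "SELECT title, body, reply FROM tickets WHERE id=?", (ticket_id,)
    ).fetchall()


conn = make_db()
print(len(fetch_unsafe(conn, "1 OR 1=1")))  # injected condition matches every row
print(len(fetch_unsafe(conn, "4/2")))       # expression is evaluated by the database
print(len(fetch_safe(conn, "1 OR 1=1")))    # treated as an odd string, matches nothing
```

The same effect (an "id" that accepts expressions such as 6/3) is a hint that a value is being pasted into a query unquoted.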

- Ticketastic: Live Instance:

- Admin login page tells you which is wrong, username or password. Username is "admin" ?

- Go to "/admin", get "not logged in".

- Go to "/ticket?id=9", get "not logged in".

- Go to "/newUser", get "not logged in".

- If you submit a ticket with a body that contains an URL, it fetches the contents of that URL ?

- ...

- Petshop Pro:

- Use Burp or OWASP ZAP proxy to repeat check-out post with a modified price, get flag whose value ends "fa6d".

- Go to "/login", get a login page. It tells you which is wrong, username or password.

- Go to "/static", get an error "unable to connect to http://127.0.0.1/static/" !

- ...

- Model E1337 - Rolling Code Lock:

- Input such as "+3" or "-3" is accepted, but expression such as "3+3" throws error.

- Tried Burp Sequencer but it refused to do character-level analysis even with over 500 samples.

- Hints say try XML, app runs on uwsgi-nginx-flask-docker. Flask is a Python web framework (its templating language is Jinja2). Tried various "{{password=0}}" kinds of things, but no luck.

- ...

- TempImage:

- Files upload.php, doUpload.php, directory /files

- Only PNG files allowed. And it checks the format; uploading an HTML file named "something.png" fails.

- Upload a file, it goes to "/files/32CHARHEXSTRING_FILENAME.png".

32CHARHEXSTRING is always the same for a given filename, so must be derived from it.

It is the MD5 hash of the filename (including .png).

Mangle hex string and do GET, 404 from "Apache/2.4.7 (Ubuntu) Server at 127.0.0.1 Port 46938".

- There is a hidden form field "filename".

- Go to "/doUpload.php?id=1", get interesting error msgs.

- ...
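Two of the TempImage observations above can be sketched in code: the stored path built from the MD5 of the filename, and a format check. How the server really validates uploads is not known; testing for the 8-byte PNG signature is just one plausible way it could reject an HTML file renamed to ".png". Both function names are made up.

```python
import hashlib

# Every valid PNG file starts with these 8 bytes.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def looks_like_png(data: bytes) -> bool:
    """Guess at a server-side check: does the content start with the PNG signature?"""
    return data.startswith(PNG_SIGNATURE)


def stored_path(filename: str) -> str:
    """Path the upload ends up at: MD5 of the filename (including .png), then the name."""
    digest = hashlib.md5(filename.encode("utf-8")).hexdigest()
    return "/files/%s_%s" % (digest, filename)


print(looks_like_png(b"<html><body>hi</body></html>"))
print(stored_path("something.png"))
```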

- VulnHub:

Ceos3c's "Basic Pentesting 1 Walkthrough"

Ceos3c's "Basic Pentesting 2 Walkthrough"

grokdesigns' "VulnHub Walkthrough - LazySysAdmin"

aisherwood's "DroopyOS"

aisherwood's "Mr. Robot Vulnhub Write Up"

Peleus's "pWnOS 2.0"

Jayson Grace's "Vulnhub - Sedna"

tarih's "Raven Walkthrough"

Leigh's "Vulnhub.com - Mr. Robot 1 CTF Walkthrough"

- BugBountyNotes:

- Google Gruyere:

- LiveOverflow:

- Enigma Group:

- picoCTF:

- HackThis !!:

- HackThisSite.org:

- Hack The Box (.eu):

Circle Ninja's "Beginner Tips to Own Boxes at HackTheBox !"

0xRick

Elyes Chemengui's "Mischief Hackthebox Write-up"

Shahzada AL Shahriar Khan's "HackTheBox - Mischief Writeup"

- Google CTF:

Jack Halon's "Google CTF (2018): Beginners Quest - Introduction"

- Pentestit Labs:

Lots of walkthroughs at Jack Halon's "Posts"

- ThisIsLegal:

- Valhalla's "Challenges Overview":

- Damn Vulnerable Web Application (DVWA):

blackMORE Ops' "Setting up Damn Vulnerable Web Application (DVWA) - Pentesting Lab"

- OWASP Juice Shop Project:

Contains some 87 challenges ?

Takhion's "Beginner's Guide to OWASP Juice Shop, Your Practice Hacking Grounds for the 10 Most Common Web App Vulnerabilities"

reddit's /r/owasp_juiceshop

- OWASP WebGoat Project:

- Buggy Web Application (bWAPP):

- try2hack:

- Wizard Labs:

- Root Me:

- W3Challs:

- exploit.education:

- Under the Wire:

Windows PowerShell challenges.

- OverTheWire:

Connect through SSH to a CLI. Very first one in Bandit is via "ssh -p 2220 bandit0@bandit.labs.overthewire.org". At each stage, you have to find a password which will let you log in to the next stage. For each stage, the web page (e.g. https://overthewire.org/wargames/bandit/bandit8.html) gives you hints about how to find the password.

The first set, "Bandit", covers some CLI and filesystem and file naming stuff, then some text processing, then some SSL stuff. I got stuck at 21, gave up.

Ceos3c's "OverTheWire Bandit Walkthrough Part 1 - Level 0 - 5"

Ceos3c's "OverTheWire Bandit Walkthrough Part 2 - Level 6 - 10"

Ceos3c's "OverTheWire Bandit Walkthrough Level 10 - 15"

amanhardikar's "Penetration Testing Practice Lab - Vulnerable Apps / Systems" (ignore big image, see lists of sites; huge, don't get lost in here)

EdOverflow / bugbounty-cheatsheet / practice-platforms.md

blackMORE Ops' "124 legal hacking websites to practice and learn"

OWASP Vulnerable Web Applications Directory Project (click on tabs across the top of page)

bitvijays's "CTF Series : Vulnerable Machines" (lots of techniques)

"Web Penetration Testing Lab setup" articles in Hacking Articles' "Web Penetration Testing"

CyberX's "Hacking Lab Setup" (more than just lab setup)

Cyber Security Blog (a few walkthroughs)

Hacking Articles' "CTF Challenges" (lots of walkthroughs)

From discussion on reddit 1/2019:

> Real life box vs vulnhub/hackthebox vm

> For those who had real life exp. How similar or different was it?

Some are similar a lot are not. Real world is primarily misconfiguration and out of date software

...

Exactly true. Also, in real life you don't see nearly as many ports exposed as you see on many of these boxes. It's quite rare to see a machine in real life that has 80 and 443 open to the world that also has anything else open like 22, 25, 445 etc.

Miscellaneous

Lots of hackers and bounty-hunters are on Twitter; that seems to be the standard way to communicate. But I don't like Twitter, and I have no need to get the latest news right away. Better to read articles and books and documentation, learn tools and techniques, try challenges.

OTOH, sometimes bounty-hunters post tips on Twitter. To follow someone on Twitter without having an account yourself, try Queryfeed, Twitter-searching on "@theirusername" to get an RSS feed of just their tweets. Or you could just go to "twitter.com/theirusername". Some possible usernames to follow: Jhaddix, yaworsk, DanielMiessler, bugcrowd, intigriti, Hacker0x01, thegrugq.

Using Queryfeed, you can follow hashtags such as: #ethicalhacker.

threader.app ?

If you find a bug by accident

If you're not in a bug-bounty program (maybe the company doesn't even have such a program), and you find a serious security bug:

- Do NOT just release the bug information into the wild.

- If you actively worked to find or exploit the vulnerability, you may have violated the law.

- See Reporting section for advice about replicating and documenting the bug.

Contacting the company:

Hacks.icu's "Huge list of companies with active bug bounties"

Cybersecurity Transparency Project's "Security Contact Search"

OWASP's "Vulnerability Disclosure Cheat Sheet"

Brendan Hesse's "How to Submit a Bug Report to Apple, Google, Facebook, Twitter, Microsoft, and More"

For open-source components:

Bruce Mayhew's "Sonatype and HackerOne eliminate the pain of reporting open source software vulnerabilities"

huntr

- Look for file "/.well-known/security.txt" on company's web site.

- Do a search for "companyname responsible disclosure"

or "companyname bug bounty" to see if they're in any bug-bounty program.

- Look on the company web site for a Security or Bug-Reporting or Technical Support email address or page.

Last resort: look for a Data Privacy officer.

- Maybe you have to create a user account on their site to be able to contact them.

- A few companies have onion (Tor) sites for maximum anonymity:

alecmuffett / real-world-onion-sites

- Look on GitHub to see if the company has relevant projects there.
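For the security.txt step in the list above, a small sketch: build the well-known URL for a site and pull the Contact entries out of the file's text. Fetching is left out so the example stays offline; the sample file content and both function names are invented for illustration.

```python
from urllib.parse import urljoin


def security_txt_url(site: str) -> str:
    """Return the well-known security.txt location for a site URL."""
    return urljoin(site, "/.well-known/security.txt")


def contacts(security_txt: str) -> list:
    """Extract the values of all Contact fields from security.txt text."""
    found = []
    for line in security_txt.splitlines():
        line = line.strip()
        if line.startswith("#") or ":" not in line:
            continue  # skip comments and lines that are not "Field: value"
        name, value = line.split(":", 1)
        if name.strip().lower() == "contact":
            found.append(value.strip())
    return found


# Invented sample content.
sample = """# Our security policy
Contact: mailto:security@example.com
Expires: 2030-01-01T00:00:00.000Z
Contact: https://example.com/report
"""

print(security_txt_url("https://example.com/some/page"))
print(contacts(sample))
```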

One way to verify a contact if you're not sure: get them to put some file (containing a code you give them) on the company's main web site.

The company may accept the report gracefully, or they may be hostile and/or call law-enforcement.

If the company says "that's not a vulnerability" or "that's not important", consider that they may be right. But if you disagree, and can't convince them, you might say "okay, so you give me permission to publish an article about it ?".

Instead of contacting the company directly, you could contact:

- HackerOne's "Disclosure Assistance"

- Zero Day Initiative

- ZeroDisclo.com

- SSD Secure Disclosure

- CISA Coordinated Vulnerability Disclosure (CVD) Process

- Open Bug Bounty - Report

- Disclose.io community forum

- FireBounty

- A well-known ethical hacker / security researcher such as

Troy Hunt

or

Brian Krebs

or

Tod Beardsley (director of research at Rapid7).

- In a really tough case, you could try contacting a regulator for the company's industry,

or the company's insurance company, or their legal department, or one of their big customers.

You might want to consult a lawyer first.

Things probably will go smoother if you don't ask for money, just leave it up to the company to decide if they want to reward you. If you do ask for money, keep the amount reasonable. But whichever way you decide, I would be open about that pretty early in the process, as soon as you get into contact with security-type people.

Don't expect an instant response. You're an unknown guy coming in on a non-standard channel, not part of any program. They may see a lot of scammers and false alarms, and they're busy with other work. A smaller company may get very few security reports each year, and not have a clear process for dealing with them.

Don't expect a quick fix and quick permission to disclose. They may have to test in current version, find it's real, test in N older versions, develop fix for each version, test fix in each version, make patches available to customers, inform customers, find out if/when customers applied the patches. It can be a LONG process.

From people on reddit:

> When is a good time to start working on small paying bounties?

I think one of the biggest mistakes people make is thinking that they need to know it all before starting. Or, when they read writeups that the author knew all the techniques and details already when they went hunting.

The reality is, sure it happens that you fully understand all the details of the system you're targeting and the exploit you find, but plenty of times you do research either into the target system or into different techniques. And you spend hours doing research, that time doing research is there but often invisible in writeups.

As long as you've got the fundamentals, the best thing you can do is just get started and learn as you go. It's absolutely okay to spend hours researching on dead ends and feel like you've made no progress. You never know where those little tidbits you learned will come to assist you later.

...

... it's very easy reading the solution once it's all laid out in a few simple commands. And this often leads to the impression that it was also *discovered* very easily too. But as you said, this is not necessarily the case. All the "head banging against the wall" isn't apparent in a nice clean write-up.

Done so far

- Started 12/2018. Tons of reading.

- Made a live-session Kali image on USB flash drive:

- Downloaded Kali 2018.4 ISO and used Mint "Disks" app to write to flash drive.

- Added persistence by doing in CLI

(per Adding Persistence to a Kali Linux "Live" USB Drive):

    end=7gb
    read start _ < <(du -bcm kali-linux-2018.4-amd64.iso | tail -1); echo $start
    sudo parted /dev/sdc mkpart primary $start $end
    sudo mkfs.ext3 -L persistence /dev/sdc3
    sudo e2label /dev/sdc3 persistence
    sudo mkdir -p /mnt/my_usb
    sudo mount /dev/sdc3 /mnt/my_usb
    cd /mnt/my_usb
    # had to do sudo chmod 777 . to make next line work
    sudo echo "/ union" >persistence.conf
    # sudo chmod 755 . to restore permissions to original
    cd ~
    sudo umount /dev/sdc3

- Booted from Kali live session USB, selecting "Live (forensic mode)",

which means hard disk will not be touched. Booting got stuck for a couple

of minutes waiting for "LSB - thin initscript". Login password is "toor", but I wasn't asked for it.

Distro has lots of tools installed; doesn't have OpenVAS. Has Burp Community Edition.

- Tested, and "Live (forensic mode)" doesn't have persistence.

"Live with persistence" mode does have persistence.

Both modes usually spend minutes waiting for "LSB - thin initscript" at boot time.

- Was going to install OpenVAS on Kali, but the live session is just too slow at everything.

- Tried to install OpenVAS on my normal Mint desktop; see

OpenVAS.

Finally gave up.

- Joined HackerOne and Bugcrowd.

- Installed Burp Suite CE and got the basics working.

- More tons of reading.

- Upgraded my laptop's RAM from 3 GB to 8 GB, so I can run VMs etc.

And just have a faster machine in general.

- Installed VirtualBox and a Xubuntu VM and learned a bit about that.

- Tried to install Dradis, got stuck. Support not helpful.

- Started doing challenges on hacker101, quickly got stuck and feeling

like an idiot. Worked through it over the next week or so, skipping

to other challenges when I got stuck.

- Installed OWASP ZAP and got the basics working.

- 2/2019: Did some Hacker101 CTF's

and got to a level where I got an invitation

from a private program. It was a VDP (no money) program. Declined invitation because

I think my skills are not good enough yet.

- Started on OverTheWire Bandit,

got stuck at 21, trying to find port something is listening on.

- 3/2019: Got sidetracked into creating a desktop app to do bounty-hunting project management.

I want the app, but also it's an excuse to learn Bootstrap, ng-bootstrap, Angular, Electron, (already

knew a bit about) Node.js, (already knew a bit about) SQL.

- Gave up on app development in Angular, changed to Java.

- Started on Portswigger's "Web Security Academy"

- 6/2019: Got sidetracked into creating a Firefox add-on to import/export Container settings:

ContainersExportImport.

Learned a lot about Firefox add-ons and the browser API, but the Containers architecture is horrible

and the add-on is near-useless.

- 8/2019: Creating a Firefox add-on to replace Decentraleyes add-on:

DecentraleyesSimpler.

- 9/2019: Decided to just pick something real and give it a try.

Chose https://hackerone.com/hyatt. Created a test account on the site and started looking at

their main page. Immediately started learning some new things about HTML etc. Useful.

- Frustrated that I'm not progressing faster, but it's my own fault, I'm distracted by

N other things and not putting in the time I should on actual bug-hunting. Family stuff, medical

stuff, moving my web site to use Hugo, learning, developing a Thunderbird extension, more.

- First half of 2020, I'm focusing more on general learning: Linux, containers, a little Python,

moving from Mint to Ubuntu, zfs and Btrfs, learning GitHub multi-contributor project use, more.

- Second half of 2020, still general learning and some development, and I think it

will pay off when I do some bug-bounty. In particular, refining my personal web site has

taught me a lot I didn't know about HTML and CSS.

- First half of 2021, a bit stuck on starting. Did develop a couple of Android apps.

Still learning a lot about Linux and security etc.